ML & Vision

WWDC23 -

13:47

Detect animal poses in Vision

Go beyond detecting cats and dogs in images. We'll show you how to use Vision to detect the individual joints and poses of these animals as well — all in real time — and share how you can enable exciting features like animal tracking for a camera app, creative embellishment on an animal photo,...

-

16:50

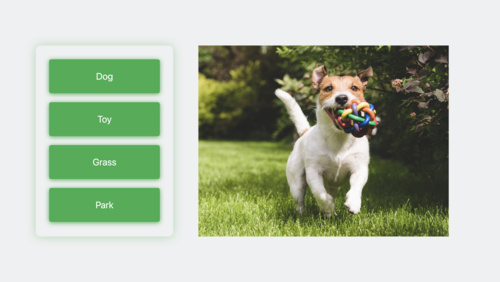

Discover machine learning enhancements in Create ML

Find out how Create ML can help you do even more with machine learning models. Learn about the latest updates to image understanding and text-based tasks with multilingual BERT embeddings. Discover how easy it is to train models that can understand the content of images using multi-label...

-

19:56

What’s new in VisionKit

Discover how VisionKit can help people quickly lift subjects from images in your app and learn more about the content of an image with Visual Look Up. We'll also take a tour of the latest updates to VisionKit for Live Text interaction, data scanning, and expanded support for macOS apps. For more...

-

18:38

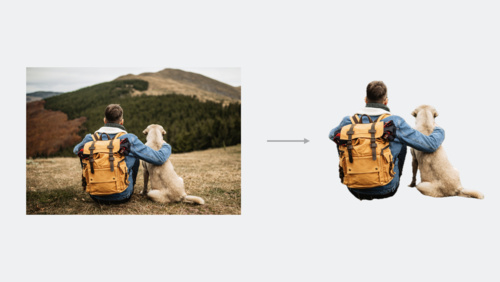

Lift subjects from images in your app

Discover how you can easily pull the subject of an image from its background in your apps. Learn how to lift the primary subject or to access the subject at a given point with VisionKit. We'll also share how you can lift subjects using Vision and combine that with lower-level frameworks like Core...

-

14:25

Explore Natural Language multilingual models

Learn how to create custom Natural Language models for text classification and word tagging using multilingual, transformer-based embeddings. We'll show you how to train with less data and support up to 27 different languages across three scripts. Find out how to use these embeddings to fine-tune...

-

14:38

Explore 3D body pose and person segmentation in Vision

Discover how to build person-centric features with Vision. Learn how to detect human body poses and measure individual joint locations in 3D space. We'll also show you how to take advantage of person segmentation APIs to distinguish and segment up to four individuals in an image. To learn more...

-

21:15

Optimize machine learning for Metal apps

Discover the latest enhancements to accelerated ML training in Metal. Find out about updates to PyTorch and TensorFlow, and learn about Metal acceleration for JAX. We'll show you how MPS Graph can support faster ML inference when you use both the GPU and Apple Neural Engine, and share how the...

-

25:18

Use Core ML Tools for machine learning model compression

Discover how to reduce the footprint of machine learning models in your app with Core ML Tools. Learn how to use techniques like palettization, pruning, and quantization to dramatically reduce model size while still achieving great accuracy. Explore comparisons between compression during the...

-

17:35

Integrate with motorized iPhone stands using DockKit

Discover how you can create incredible photo and video experiences in your camera app when integrating with DockKit-compatible motorized stands. We'll show how your app can automatically track subjects in live video across a 360-degree field of view, take direct control of the stand to customize...

-

23:21

Improve Core ML integration with async prediction

Learn how to speed up machine learning features in your app with the latest Core ML execution engine improvements and find out how aggressive asset caching can help with inference and faster model loads. We'll show you some of the latest options for async prediction and discuss considerations for...

-

7:52

Customize on-device speech recognition

Find out how you can improve on-device speech recognition in your app by customizing the underlying model with additional vocabulary. We'll share how speech recognition works on device and show you how to boost specific words and phrases for more predictable transcription. Learn how you can...

-

WWDC22 -

16:46

What's new in Create ML

Discover the latest updates to Create ML. We'll share improvements to Create ML's evaluation tools that can help you understand how your custom models will perform on real-world data. Learn how you can check model performance on each type of image in your test data and identify problems within...

-

25:31

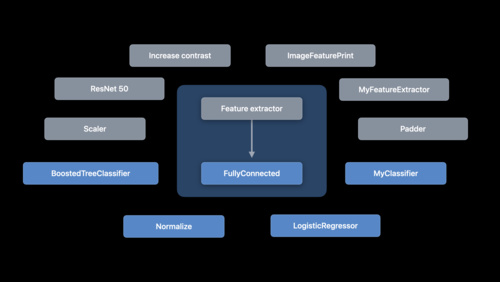

Get to know Create ML Components

Create ML makes it easy to build custom machine learning models for image classification, object detection, sound classification, hand pose classification, action classification, tabular data regression, and more. And with the Create ML Components framework, you can further customize underlying...

-

12:11

Capture machine-readable codes and text with VisionKit

Meet the Data Scanner in VisionKit: This framework combines AVCapture and Vision to enable live capture of machine-readable codes and text through a simple Swift API. We'll show you how to control the types of content your app can capture by specifying barcode symbologies and language selection...

-

23:30

Optimize your Core ML usage

Learn how Core ML works with the CPU, GPU, and Neural Engine to power on-device, privacy-preserving machine learning experiences for your apps. We'll explore the latest tools for understanding and maximizing the performance of your models. We'll also show you how to generate reports to easily...

-

13:25

Compose advanced models with Create ML Components

Take your custom machine learning models to the next level with Create ML Components. We'll show you how to work with temporal data like video or audio and compose models that can count repetitive human actions or provide advanced sound classification. We'll also share best practices on using...

-

19:48

What's new in Vision

Learn about the latest updates to Vision APIs that help your apps recognize text, detect faces and face landmarks, and implement optical flow. We'll take you through the capabilities of optical flow for video-based apps, show you how to update your apps with revisions to the machine learning...

-

29:51

Accelerate machine learning with Metal

Discover how you can use Metal to accelerate your PyTorch model training on macOS. We'll take you through updates to TensorFlow training support, explore the latest features and operations of MPS Graph, and share best practices to help you achieve great performance for all your machine learning...

-

17:19

Explore the machine learning development experience

Learn how to bring great machine learning (ML) based experiences to your app. We'll take you through model discovery, conversion, and training and provide tips and best practices for ML. We'll share considerations to take into account as you begin your ML journey, demonstrate techniques for...

-

Tech Talks -

25:18

Convert PyTorch models to Core ML

Bring your PyTorch models to Core ML and discover how you can leverage on-device machine learning in your apps. The PyTorch machine learning framework can help you create and train complex neural networks. After you build these models, you can convert them to Core ML and run them entirely...

-

23:48

Explore and manipulate data in Swift with TabularData

Discover how you can use the TabularData framework to load, explore, and manipulate unstructured data in Swift — whether you need to pre-process data for a machine learning task or digest data on-the-fly in your app. Learn how this framework can help you handle large datasets, join multiple...

-

15:48

Improve Object Detection models in Create ML

When you train custom Core ML models for object detection in Create ML, you can bring image understanding to your app. Discover how transfer learning allows you to build smaller models with less training data. We'll also take you through some of the advanced parameters in Create ML that help you...

-

WWDC21 -

36:34

The process of inclusive design

Discover how you can deliver inclusive apps that can foster amazing experiences for everyone who uses your software. We'll take you through best practices for creating and empowering diverse teams and explore how inclusivity influences every stage of the design and development process.

-

16:49

Build dynamic iOS apps with the Create ML framework

Discover how your app can train Core ML models fully on device with the Create ML framework, enabling adaptive and customized app experiences, all while preserving data privacy. We'll explore the types of models that can be created on-the-fly for image-based tasks like Style Transfer and Image...

-

19:16

Discover built-in sound classification in SoundAnalysis

Explore how you can use the Sound Analysis framework in your app to detect and classify discrete sounds from any audio source, including live sounds from a microphone or from a video or audio file, and identify the precise moment when that sound occurs. Learn how the built-in sound...

-

17:58

Detect people, faces, and poses using Vision

Discover the latest updates to the Vision framework to help your apps detect people, faces, and poses. Meet the Person Segmentation API, which helps your app separate people in images from their surroundings, and explore the latest continuous metrics for tracking the pitch, yaw, and roll of the...

-

26:49

Classify hand poses and actions with Create ML

With Create ML, teaching your app to understand the expressiveness of the human hand has never been easier. Discover how you can build on the support for Hand Pose Detection in Vision and train custom Hand Pose and Hand Action classifiers using the Create ML app and framework. Learn how simple...

-

32:44

Discoverable design

Discover how you can create interactive, memorable experiences to onboard people into your app. We'll take you through discoverable design practices and explain how you can craft explorable, fun interfaces that help people grasp the possibilities of your app at a glance. We'll also show you how to...

-

19:12

Extract document data using Vision

Discover how Vision can provide expert image recognition and analysis in your app to extract information from documents, recognize text in multiple languages, and identify barcodes. We'll explore the latest updates to Text Recognition and Barcode Detection, show you how to bring all these tools...

-

24:30

Tune your Core ML models

Bring the power of machine learning directly to your apps with Core ML. Discover how you can take advantage of the CPU, GPU, and Neural Engine to provide maximum performance while remaining on device and protecting privacy. Explore MLShapedArray, which makes it easy to work with multi-dimensional...

-

29:12

Accelerate machine learning with Metal Performance Shaders Graph

Metal Performance Shaders Graph is a compute engine that helps you build, compile, and execute customized multidimensional graphs for linear algebra, machine learning, computer vision, and image processing. Discover how MPSGraph can accelerate the popular TensorFlow platform through a Metal...

-

14:22

Use Accelerate to improve performance and incorporate encrypted archives

The Accelerate framework helps you perform large-scale mathematical computations and image calculations that are optimized for high performance and low energy consumption. Explore the latest updates to Accelerate and its Basic Neural Network Subroutines library, including additional layers, activation...

-

WWDC20 -

26:06

Build an Action Classifier with Create ML

Discover how to build Action Classification models in Create ML. With a custom action classifier, your app can recognize and understand body movements in real-time from videos or through a camera. We'll show you how to use samples to easily train a Core ML model to identify human actions like...

-

24:21

Detect Body and Hand Pose with Vision

Explore how the Vision framework can help your app detect body and hand poses in photos and video. With pose detection, your app can analyze the poses, movements, and gestures of people to offer new video editing possibilities, or to perform action classification when paired with an action...

-

24:42

Use model deployment and security with Core ML

Discover how to deploy Core ML models outside of your app binary, giving you greater flexibility and control when bringing machine learning features to your app. And learn how Core ML Model Deployment enables you to deliver revised models to your app without requiring an app update. We'll also...

-

41:04

Make apps smarter with Natural Language

Explore how you can leverage the Natural Language framework to better analyze and understand text. Learn how to draw meaning from text using the framework's built-in word and sentence embeddings, and how to create your own custom embeddings for specific needs. We'll show you how to use samples...

-

24:35

Explore Computer Vision APIs

Learn how to bring Computer Vision intelligence to your app when you combine the power of Core Image, Vision, and Core ML. Go beyond machine learning alone and gain a deeper understanding of images and video. Discover new APIs in Core Image and Vision to bring Computer Vision to your application...

-

11:48

Build Image and Video Style Transfer models in Create ML

Bring stylized effects to your photos and videos with Style Transfer in Create ML. Discover how you can train models in minutes that make it easy to bring creative visual features to your app. Learn about the training process and the options you have for controlling the results. And we'll explore...

-

36:27

Explore the Action & Vision app

It's now easy to create an app for fitness or sports coaching that takes advantage of machine learning — and to prove it, we built our own. Learn how we designed the Action & Vision app using Object Detection and Action Classification in Create ML along with the new Body Pose...

-

31:16

Get models on device using Core ML Converters

With Core ML you can bring incredible machine learning models to your app and run them entirely on-device. And when you use Core ML Converters, you can incorporate almost any trained model from TensorFlow or PyTorch and take full advantage of the GPU, CPU, and Neural Engine. Discover everything...

-

18:39

Control training in Create ML with Swift

With the Create ML framework you have more power than ever to easily develop models and automate workflows. We'll show you how to explore and interact with your machine learning models while you train them, helping you get a better model quickly. Discover how training control in Create ML can...

-

39:35

Build customized ML models with the Metal Performance Shaders Graph

Discover the Metal Performance Shaders (MPS) Graph, which extends Metal's Compute capabilities to multi-dimensional Tensors. MPS Graph builds on the highly tuned library of data parallel primitives that are vital to machine learning and leverages the tremendous power of the GPU. Explore how MPS...

-

WWDC19 -

40:38

Core ML 3 Framework

Core ML 3 now enables support for advanced model types that were never before available in on-device machine learning. Learn how model personalization brings amazing opportunities to your app. Gain a deeper understanding of strategies for linking models and improvements to Core ML...

-

6:10

Advances in Speech Recognition

Speech Recognizer can now be used locally on iOS or macOS devices with no network connection. Learn how you can bring speech-to-text support to your app while maintaining privacy and eliminating the limitations of server-based processing. The speech recognition API has also been enhanced to provide...

-

14:34

Introducing the Create ML App

Bringing the power of Core ML to your app begins with one challenge. How do you create your model? The new Create ML app provides an intuitive workflow for model creation. See how to train, evaluate, test, and preview your models quickly in this easy-to-use tool. Get started with one of the many...

-

14:49

What's New in Machine Learning

Core ML 3 has been greatly expanded to enable even more amazing, on-device machine learning capabilities in your app. Learn about the new Create ML app which makes it easy to build Core ML models for many tasks. Get an overview of model personalization; exciting updates in Vision, Natural...

-

20:11

Training Sound Classification Models in Create ML

Learn how to quickly and easily create Core ML models capable of classifying the sounds heard in audio files and live audio streams. In addition to providing you the ability to train and evaluate these models, the Create ML app allows you to test the model performance in real-time using the...

-

15:41

Training Object Detection Models in Create ML

Custom Core ML models for Object Detection offer you an opportunity to add some real magic to your app. Learn how the Create ML app in Xcode makes it easy to train and evaluate these models. See how you can test the model performance directly within the app by taking advantage of Continuity...

-

15:01

Building Activity Classification Models in Create ML

Your iPhone and Apple Watch are loaded with a number of powerful sensors including an accelerometer and gyroscope. Activity Classifiers can be trained on data from these sensors to bring some magic to your app, such as knowing when someone is running or swinging a bat. Learn how the Create ML app...

-

39:50

Understanding Images in Vision Framework

Learn all about the many advances in the Vision Framework, including effortless image classification, image saliency, image similarity, improved facial feature detection, and face capture quality scoring. This packed session will show you how easy it is to bring powerful...

-

12:05

Training Text Classifiers in Create ML

Create ML now enables you to create models for Natural Language that are built on state-of-the-art techniques. Learn how these models can be easily trained and tested with the Create ML app. Gain insight into the powerful new options for transfer learning, word embeddings, and text catalogs.

-

10:31

Training Recommendation Models in Create ML

Recommendation models for Core ML can enable a very personal experience for the customers using your app. They power suggestions for what music to play or what movie to see in the apps you use every day. Learn how you can easily create a custom Recommendation model from all sorts of data sources...

-

29:43

Creating Great Apps Using Core ML and ARKit

Take a journey through the creation of an educational game that brings together Core ML, ARKit, and other app frameworks. Discover opportunities for magical interactions in your app through the power of machine learning. Gain a deeper understanding of approaches to solving challenging computer...

-

39:19

Advances in Natural Language Framework

Natural Language is a framework designed to provide high-performance, on-device APIs for natural language processing tasks across all Apple platforms. Learn about the addition of Sentiment Analysis and Text Catalog support in the framework. Gain a deeper understanding of transfer learning for...

-

38:15

Text Recognition in Vision Framework

Document Camera and Text Recognition features in Vision Framework enable you to extract text data from images. Learn how to leverage this built-in machine learning technology in your app. Gain a deeper understanding of the differences between fast versus accurate processing as well as...

-

41:25

Metal for Machine Learning

Metal Performance Shaders (MPS) includes a highly tuned library of data parallel primitives vital to machine learning and leveraging the tremendous power of the GPU. With iOS 13 and macOS Catalina, MPS improves performance, enables more neural networks, and is now even easier to use. Learn more...

-

57:45

Designing Great ML Experiences

Machine learning enables new experiences that understand what we say, suggest things that we may love, and allow us to express ourselves in new, rich ways. Machine learning can make existing experiences better by automating mundane tasks and improving the accuracy and speed of interactions. Learn...

-